Building Tactician: PHP Scheduling with AI

December 18, 2025

At Tag1, we believe in proving AI within our own work before recommending it to clients. This post is part of our AI Applied series, where team members share real stories of how they're using AI and the insights and lessons they learn along the way.

Here, Jamie Hollern, Senior Software Engineer, explores how AI supported his work to build Tactician, a modern PHP scheduling library, by generating a production-ready round-robin scheduler for tournaments and other structured pairing tasks.

Why I Built Tactician

For my esports platform Mission Gaming, I needed an efficient way to generate fair and balanced tournament schedules. Given that we were already running PHP for the organization, I wanted to stick with that language for this project if possible. More broadly, I wanted to see if AI-assisted development could help create a modern, extensible PHP solution to a problem space where PHP traditionally hasn’t had viable tools.

Generating structured schedules is a type of combinatorial problem, which means it’s not just about assigning players to matches, but doing so under a set of rules or constraints that make the schedule fair and workable. For example, we might want to prevent players from the same city or team from meeting early in a tournament. Each of these conditions becomes a constraint, and the AI helped me design and validate how those rules could actually work in code.
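To make the idea of a constraint concrete, here is a minimal sketch in Python (chosen for brevity; it is not Tactician's PHP API). The names `Player`, `no_same_team_before`, and `violations` are hypothetical and exist only for this illustration. It treats a constraint as a function that accepts or rejects a pairing in a given round, and then checks a finished schedule against a list of such rules.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Player:
    name: str
    team: str


def no_same_team_before(first_allowed_round):
    """Build a rule that rejects same-team pairings before a given round."""
    def check(a, b, round_number):
        return a.team != b.team or round_number >= first_allowed_round
    return check


def violations(schedule, constraints):
    """Return every (round, a, b) pairing that breaks at least one rule."""
    return [
        (round_number, a, b)
        for round_number, pairings in enumerate(schedule, start=1)
        for a, b in pairings
        if not all(rule(a, b, round_number) for rule in constraints)
    ]


alice, bob = Player("Alice", "Red"), Player("Bob", "Red")
cara, dan = Player("Cara", "Blue"), Player("Dan", "Blue")

schedule = [
    [(alice, bob), (cara, dan)],  # round 1: both matches are same-team
    [(alice, cara), (bob, dan)],  # round 2
    [(alice, dan), (bob, cara)],  # round 3
]

# Same-team matches are only allowed from round 3 onward.
bad = violations(schedule, [no_same_team_before(3)])
print(len(bad))  # 2
```

Modeling each rule as a small, independent function keeps rules easy to test one at a time, which matters when a human has to confirm that the rule matches the real business requirement.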

In languages like Python or C++, developers typically rely on powerful mathematical “solver” engines to crunch these problems quickly. PHP, however, doesn’t have a native constraint solver. Existing options like LP Solve haven’t been updated in a decade. That gap made this project all the more interesting.

The Challenge: Combinatorial Complexity

Combinatorial scheduling problems get very hard very fast. To illustrate, imagine you have 1,000 participants and want to group them in sets of four. There are over 41 billion distinct ways to pick just one such group (C(1000, 4) = 41,417,124,750), and the number of ways to split the whole field into groups is vastly larger. Exploring all of those options exhaustively is computationally expensive.
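The count of possible four-person groups is a binomial coefficient, so it can be checked directly. This short Python sketch uses the standard library's `math.comb`; the field sizes other than 1,000 are added here only to show how quickly the number grows.

```python
import math

GROUP_SIZE = 4

# Number of distinct groups of four that can be drawn from each field size.
counts = {n: math.comb(n, GROUP_SIZE) for n in (10, 100, 1_000)}

for n, count in counts.items():
    print(f"{n:>5} participants -> {count:,} possible groups")

# 1,000 participants -> 41,417,124,750 possible groups
```

Going from 100 to 1,000 participants multiplies the field by ten but the number of candidate groups by roughly ten thousand, which is why brute-force iteration stops being practical at scale.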

That’s the difference between a solver-grade system like Google’s CP-SAT solver, which handles huge datasets in milliseconds, and what PHP can practically do. PHP can still generate precise and deterministic schedules, but it does so by iterating through possibilities rather than solving them through advanced optimization algorithms.

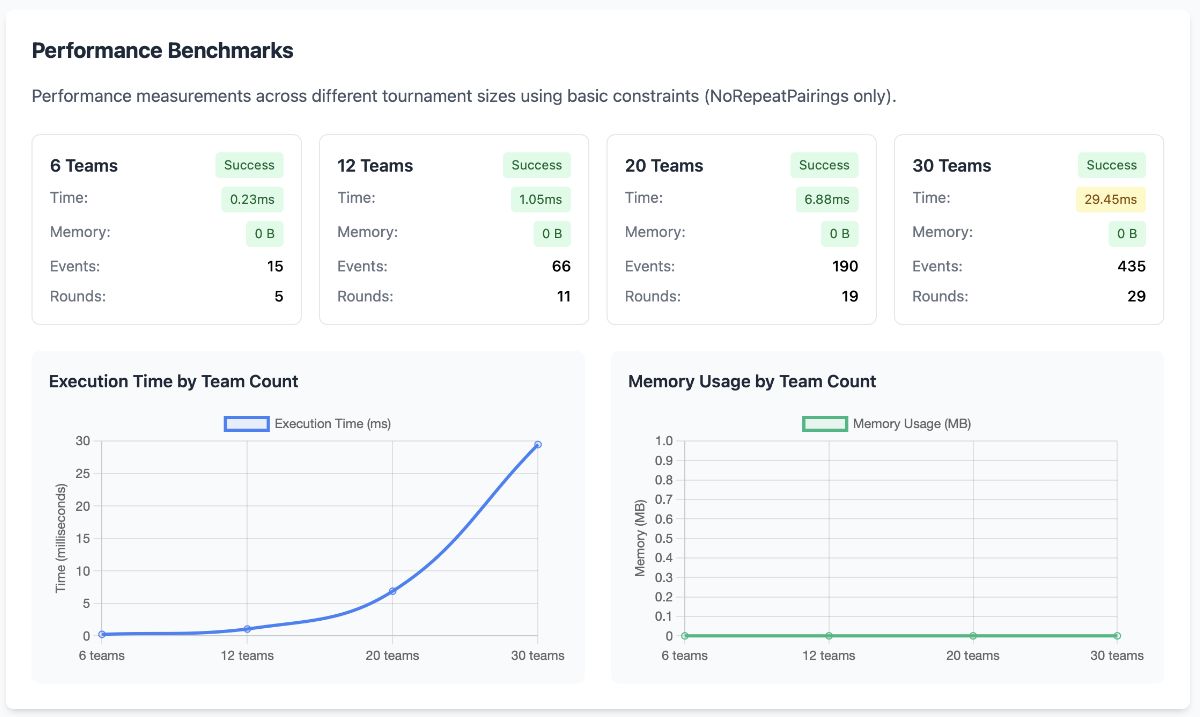

For smaller or mid-size use cases like tournaments, academic contests, and internal scheduling systems, this trade-off is completely acceptable. The results are reliable, and performance is well within a usable range (Figure 1).

How I Built It with AI

The entire Tactician project was built through AI collaboration using Cline (linked with my Claude Max subscription). A key piece of the workflow was its memory bank system. This provides a way to maintain structured context across sessions without bloating the active memory window. In practice, it meant the AI could “remember” key project architecture while safely forgetting irrelevant details between tasks. By relying on the memory bank documentation and splitting up the work into smaller tasks, I only hit Claude's context limit once across the whole development cycle.

Each feature was built through iterative planning. Before writing any code, Cline and I entered a “plan mode” where we discussed the design and trade-offs in plain language. Once we had a shared understanding, I asked it to implement the solution. This approach was especially effective for complex topics like constraint validation and multi-leg scheduling.

All code and documentation were generated by AI, with only minor human edits for clarity. The final repository, mission-gaming/tactician, includes:

- Over 90% test coverage

- Deterministic, mathematically correct round-robin scheduling

- Full PHPStan level 8 compliance

- Clean documentation and examples
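For readers unfamiliar with round-robin scheduling, one common deterministic technique is the circle method: fix one participant in place and rotate everyone else by one seat each round. The sketch below is written in Python for brevity and is an assumption about the general approach, not a copy of Tactician's implementation; the function name `round_robin` is hypothetical.

```python
def round_robin(participants):
    """Return a list of rounds; each round is a list of (a, b) pairings."""
    players = list(participants)
    if len(players) % 2:
        players.append(None)  # None marks a bye when the field is odd
    n = len(players)
    rounds = []
    for _ in range(n - 1):
        # Pair the first seat with the last, the second with the
        # second-to-last, and so on. Pairings involving a bye are dropped.
        pairings = [
            (players[i], players[n - 1 - i])
            for i in range(n // 2)
            if players[i] is not None and players[n - 1 - i] is not None
        ]
        rounds.append(pairings)
        # Keep the first player fixed and rotate everyone else one seat.
        players = [players[0], players[-1]] + players[1:-1]
    return rounds


rounds = round_robin(["Ana", "Ben", "Cy", "Dee"])
for number, pairings in enumerate(rounds, start=1):
    print(number, pairings)
```

With an even field of n participants this produces n - 1 rounds in which every participant plays every other exactly once. The same input always yields the same output, which is what makes the schedule deterministic and easy to test.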

Results: Speed, Quality, and Learnings

I reached a production-ready version in about 10 hours of AI-assisted work, something that would have taken weeks or months manually.

Quality-wise, Tactician surpassed expectations. The AI handled test scaffolding, documentation, and design patterns extremely well. What it lacked was domain awareness, or the deeper understanding of constraints and business rules that only a human can validate.

A few unexpected lessons stood out:

- AI performs best on narrow, self-contained problems. With no databases or frameworks involved, the project’s boundaries were clear, and the AI could focus entirely on logic and design.

- The memory bank workflow changed everything. It kept the sessions clean and significantly reduced the risk of hallucinations or duplicated logic.

- Human oversight remains essential. The AI wrote valid code, but sometimes merged multiple classes into a single file, which broke PSR-4 autoloading conventions. The code still ran, but that’s exactly why careful review is critical.

These insights confirmed my belief that the bottleneck in AI-assisted coding is shifting. The challenge is no longer in building, but in verifying.
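Some of that verification can be automated. PSR-4 maps each fully qualified class name to a single file path, so a file that declares a second class leaves that class unreachable by the autoloader. As a rough sketch (in Python, and not part of Tactician), the script below flags PHP files that appear to declare more than one class, interface, trait, or enum. It relies on a simple regular expression, so treat it as a heuristic and not a real PHP parser; the function names are hypothetical.

```python
import re
from pathlib import Path

# Matches declarations at the start of a line, such as "final class Foo"
# or "interface Bar". Expressions like "Foo::class" or "new class" do not
# match because they do not begin the line with a declaration keyword.
DECLARATION = re.compile(
    r"^[ \t]*(?:(?:abstract|final|readonly)\s+)*"
    r"(?:class|interface|trait|enum)\s+(\w+)",
    re.MULTILINE,
)


def declared_types(php_source):
    """Return the names of class-like types declared in a PHP source string."""
    return DECLARATION.findall(php_source)


def files_with_multiple_types(root):
    """Map each PHP file under root that declares 2+ types to those names."""
    offenders = {}
    for path in sorted(Path(root).rglob("*.php")):
        names = declared_types(path.read_text(encoding="utf-8"))
        if len(names) > 1:
            offenders[str(path)] = names
    return offenders


sample = "<?php\nnamespace App;\n\nfinal class Round {}\n\nclass Pairing {}\n"
print(declared_types(sample))  # ['Round', 'Pairing']
```

A check like this could run in CI alongside static analysis, so that structural slips are caught without relying on a reviewer noticing them.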

Apply This Approach

The development method used here, combining context-driven AI development with structured memory systems, can help users quickly produce production-ready libraries or tools with almost no manual coding.

Benefits include:

- Rapid turnaround and iteration

- Lower development costs

- Consistent high-quality code with tests, standards, and documentation built in

Ideal use cases:

- Sports and esports tournaments

- Academic competitions (debate, hackathons, quizzes)

- Corporate or event team scheduling

- Recruitment or interview matching

For small to medium scheduling needs, this method is fast, reliable, and cost-effective. For massive datasets or solver-grade optimization, libraries like Google’s CP-SAT are still the right choice. PHP simply lacks the underlying engine to handle that type of scale efficiently (Figure 2).

Conclusion

Tactician shows that AI-assisted development isn’t just about faster code generation. It’s about better collaboration between human insight and machine consistency. In a space where PHP had very few tools, this project proved that practical innovation can come from small, focused experiments.

Want to bring practical, proven AI adoption strategies to your organization? Let's start a conversation. We'd love to hear from you.

This post is part of Tag1’s AI Applied series, where we share how we're using AI inside our own work before bringing it to clients. Our goal is to be transparent about what works, what doesn’t, and what we are still figuring out, so that together, we can build a more practical, responsible path for AI adoption.