White Paper

What Makes Each LLM Different

How Today's Language Models Differ Under the Hood

November 10, 2025

Introduction

Large Language Models look identical from the outside. Text goes in, text comes out. Under the hood, they've diverged into fascinating variations of the original 2017 Transformer architecture [1].

While they share the same foundation, each major model now makes radically different engineering tradeoffs. Some activate hundreds of billions of parameters for every single token. Others route each token to just a handful of specialized "experts". Some can remember a million tokens of context but struggle to recall what's in the middle. Others maintain crisp attention across smaller windows.

These architectural choices, combined with secretive training data and wildly different alignment methods, shape what each model does well and where it struggles.

Part I: Architecture Wars

Dense Models

Anthropic's Claude series (including Sonnet and Opus), Google's Gemini models (like 2.5 Pro and Flash), and OpenAI's ChatGPT models are all believed to use dense architectures that activate every single parameter for every token they process. (There's speculation that GPT-4 and GPT-5 are not actually dense models, but this has not been confirmed or denied by OpenAI.) What does this actually mean?

In neural networks, "parameters" are the learned weights and biases that connect neurons between layers. Layers are sequential stages of computation where each layer transforms the data before passing it to the next. A typical large language model might have anywhere from 50 to 200+ layers, though the exact number for most closed models is proprietary information. We know GPT-2 had 48 layers, GPT-3 had 96 layers [2], and open models like Llama 3 are documented to have up to 126 layers [3]. For closed models from OpenAI, Anthropic, and Google, the layer counts remain undisclosed along with most other architectural details. Each layer contains attention mechanisms and feed-forward networks, and the depth (number of layers) is considered a key factor in the model's ability to capture complex patterns and relationships in data.

The exact parameter counts for these closed models remain carefully guarded secrets. None of these companies have released technical papers with architectural details comparable to what's available for some of the more open models, making independent verification of their capabilities impossible. (Many LLM providers have shared limited architectural details for earlier versions of their models but not for the latest.) Despite their wide availability across various platforms and APIs, the serving infrastructure reveals nothing more about the underlying architecture.

Sparse Models

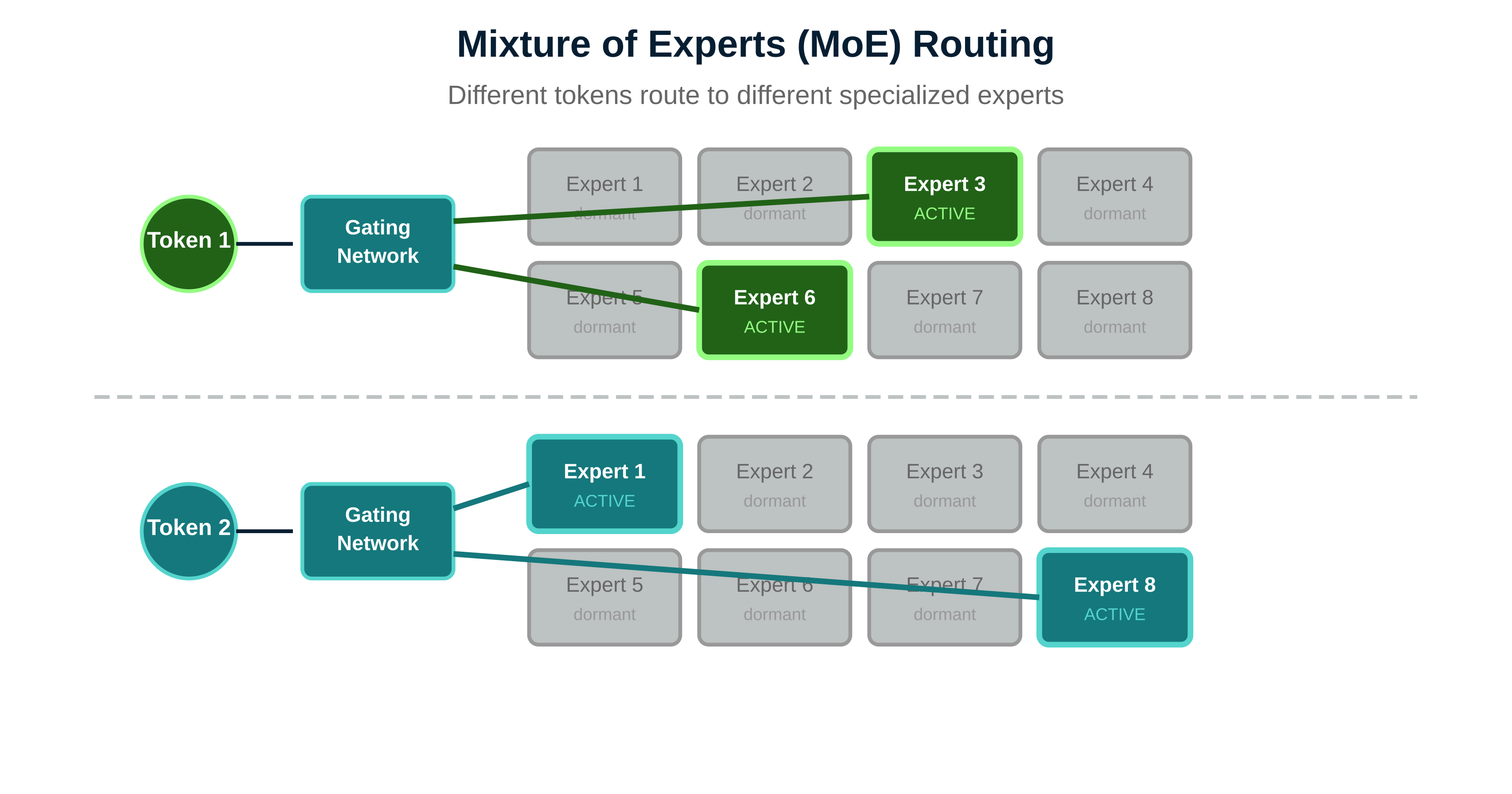

A different solution used by models like Mixtral and DeepSeek is a clever trick called Mixture of Experts (MoE). Instead of activating all parameters like Dense Models do, they use a routing system that sends each token to just a few specialized "experts." But what exactly are these experts?

In MoE architectures, "experts" are essentially separate neural networks (specifically feed-forward networks) that specialize in different types of patterns or knowledge domains. Nobody explicitly programs what each expert should know. During training, the model learns both the experts' specializations and how to route tokens to them. A small "gating network" learns to recognize patterns in incoming tokens and sends them to the most relevant experts (Figure 1). This specialization emerges organically: one expert might become good at coding patterns, another at mathematical reasoning, though we often can't clearly identify what each expert has learned.
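
The routing step described above can be sketched in a few lines. This is a minimal illustration, not any specific model's implementation: the gate scores are passed in directly (a real gating network would compute them from the token's hidden state), and the toy experts, `route_token`, and `moe_layer` are hypothetical names chosen for this example.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_token(gate_scores, top_k=2):
    """Pick the top_k experts for one token and renormalize their weights."""
    probs = softmax(gate_scores)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in chosen)
    return [(i, probs[i] / norm) for i in chosen]

def moe_layer(x, gate_scores, experts, top_k=2):
    """Weighted sum of the outputs of only the selected experts."""
    out = [0.0] * len(x)
    for idx, weight in route_token(gate_scores, top_k):
        y = experts[idx](x)  # experts that were not selected are never called
        out = [o + weight * yi for o, yi in zip(out, y)]
    return out

# Toy experts (assumption): each one just scales its input by a different factor.
experts = [lambda v, k=k: [k * vi for vi in v] for k in range(1, 9)]
gate_scores = [0.1, 2.0, -1.0, 0.3, 1.5, 0.0, -0.5, 0.2]

routing = route_token(gate_scores, top_k=2)
output = moe_layer([1.0, 2.0], gate_scores, experts, top_k=2)
```

With these scores, the token goes to experts 1 and 4 and the other six experts do no work, which is the source of the compute savings.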

DeepSeek-V3, released in December 2024, demonstrates the scale this approach can reach: it contains 671 billion total parameters but only activates 37 billion per token [4].

The model uses 256 experts, with each token routed to 8 of them. Similarly, Mixtral 8x7B, which helped popularize MoE in the open-weights community, contains 8 experts and routes each token to the top 2 experts, resulting in 47 billion total parameters but only 13 billion active per token [5,6]. This means when Mixtral processes each token, only 13 billion of its parameters perform calculations while the other 34 billion remain in memory but idle, reducing computational cost.

The practical advantages are substantial. Because only a fraction of parameters activate per token, these models need far less compute per token than their total parameter count would suggest, although every expert must still be held in memory.

Mixtral 8x7B illustrates the point. Its 8 expert networks total 47 billion parameters, not the 56 billion the "8x7B" name might suggest, because the experts share attention layers and other components. With only 13 billion parameters active per token, it becomes feasible on high-end consumer GPUs, typically with quantization. DeepSeek-V3 reports performance comparable to leading closed models such as GPT-4o and Claude 3.5 Sonnet on many benchmarks [4], while reportedly using approximately 5.5x less computational resources per token. This efficiency makes MoE particularly attractive for both local deployment and large-scale serving.
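
The gap between 56 billion and 47 billion can be checked with a rough parameter count. The sketch below uses the hyperparameters listed in the Mixtral report [6] and assumes a three-matrix gated feed-forward block per expert, untied input and output embeddings, and no bias terms; `mixtral_param_counts` is a name invented for this example.

```python
def mixtral_param_counts(dim=4096, n_layers=32, hidden_dim=14336,
                         n_heads=32, n_kv_heads=8, head_dim=128,
                         vocab_size=32000, n_experts=8, top_k=2):
    """Return (total, active-per-token) parameter counts under the stated assumptions."""
    # One expert = gated feed-forward block with three weight matrices.
    expert_ffn = 3 * dim * hidden_dim
    # Attention is shared by all experts: Q and O projections plus smaller K and V.
    attention = 2 * dim * n_heads * head_dim + 2 * dim * n_kv_heads * head_dim
    router = dim * n_experts   # the small gating network
    norms = 2 * dim            # two normalization weight vectors per layer
    shared_per_layer = attention + router + norms
    # Input embedding, output head, and the final normalization weights.
    embeddings = 2 * vocab_size * dim + dim

    total = n_layers * (shared_per_layer + n_experts * expert_ffn) + embeddings
    active = n_layers * (shared_per_layer + top_k * expert_ffn) + embeddings
    return total, active

total, active = mixtral_param_counts()
print(f"total:  {total / 1e9:.1f}B")   # about 46.7B
print(f"active: {active / 1e9:.1f}B")  # about 12.9B
```

Only the feed-forward experts are replicated eight times; attention, embeddings, and the router are counted once, which is why the total lands near 47 billion rather than 56 billion.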

However, MoE architectures introduce unique challenges that dense models avoid. The routing decisions require hard choices about which experts to use rather than the smooth gradients dense models use when all parameters contribute partially. This can cause the model to collapse during training, with all tokens routing to the same few experts while others never activate, or the routing bouncing chaotically between different experts as the model struggles to learn stable patterns. The model might also route edge cases to the wrong experts if the gating network hasn't learned to recognize them properly. Despite these complexities, the dramatic efficiency gains have made MoE architectures increasingly attractive for organizations seeking to deploy powerful models at scale.
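
Routing collapse is easy to detect once expert assignments are logged. The following is a small diagnostic sketch, not a training fix: `expert_load` and `imbalance` are hypothetical helper names, and the assignment lists are made-up data for illustration.

```python
from collections import Counter

def expert_load(assignments, n_experts):
    """Fraction of routed tokens that each expert received."""
    counts = Counter(assignments)
    total = len(assignments)
    return [counts.get(e, 0) / total for e in range(n_experts)]

def imbalance(load):
    """Busiest expert's share divided by the uniform share (1.0 = perfectly balanced)."""
    return max(load) * len(load)

# Made-up routing logs: one healthy, one collapsed onto expert 0.
balanced = [0, 1, 2, 3] * 4
collapsed = [0] * 14 + [1] * 2

balanced_load = expert_load(balanced, n_experts=4)    # [0.25, 0.25, 0.25, 0.25]
collapsed_load = expert_load(collapsed, n_experts=4)  # [0.875, 0.125, 0.0, 0.0]
idle_experts = sum(1 for share in collapsed_load if share == 0.0)
```

In the collapsed log, two of the four experts never receive a token, so their parameters contribute nothing to the model's output.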

Hybrid Architecture

AI21's Jamba represents a third path, though one that has received far less attention than dense or sparse approaches. It combines Transformers with something called Structured State Space Models (SSMs). Released in March 2024, this hybrid approach flew under the radar for most users despite its technical innovations.

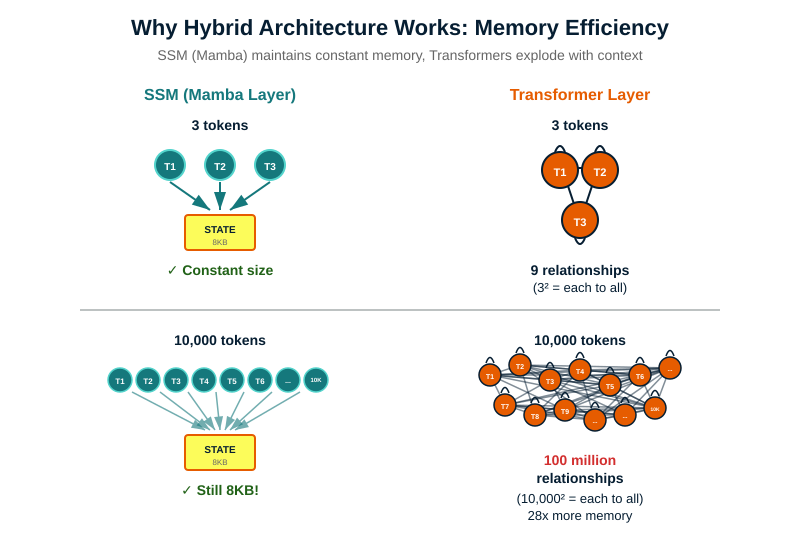

SSMs, and specifically the Mamba architecture that Jamba incorporates, process sequences using state space equations rather than attention mechanisms. While Transformers must compare every token to every other token (quadratic complexity), SSMs maintain a fixed-size hidden state that gets updated as they process the sequence linearly. This fundamental difference means SSMs can handle extremely long sequences without the memory explosion that plagues pure Transformers.
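
The contrast between a fixed-size state and all-pairs comparison can be made concrete with a toy recurrence. This is a deliberately simplified sketch: it uses constant, diagonal state dynamics, whereas Mamba makes these parameters depend on the input. `ssm_scan` and `attention_pair_count` are illustrative names, not part of any library.

```python
def ssm_scan(inputs, a, b, c):
    """Run h_t = a * h_{t-1} + b * x_t and y_t = c . h_t over a scalar sequence.

    a, b, c are lists of equal length; that length is the (fixed) state size.
    """
    state = [0.0] * len(a)
    outputs = []
    for x in inputs:
        # The state is overwritten in place: memory does not grow with sequence length.
        state = [ai * hi + bi * x for ai, hi, bi in zip(a, state, b)]
        outputs.append(sum(ci * hi for ci, hi in zip(c, state)))
    return outputs, state

def attention_pair_count(n_tokens):
    """Number of (query, key) comparisons under causal self-attention."""
    return n_tokens * (n_tokens + 1) // 2

outputs, final_state = ssm_scan([1.0, 0.0, 0.0], a=[0.5], b=[1.0], c=[1.0])
# outputs == [1.0, 0.5, 0.25]: the first input decays but is still remembered
```

Doubling the sequence length doubles the work in `ssm_scan` but roughly quadruples `attention_pair_count`, which is the scaling difference the paragraph describes.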

Jamba 1.5 Large contains 398 billion total parameters with 94 billion active, constructed from blocks that repeat a pattern of one Transformer layer for every seven Mamba layers [7]. This 1:7 ratio emerged from extensive testing showing it provided the best balance between quality and compute efficiency. The model also uses a Mixture of Experts approach within its hybrid framework, with 16 experts per layer where 2 are activated per token.

The payoff is dramatic for long contexts. Jamba achieves a 256,000 token context window while requiring only 9GB of memory to store the conversation history, compared to 252GB for a similarly-sized pure Transformer model (Llama-3.1 405B), roughly 28x less memory under comparable conditions (Figure 2). This is because Transformers must store key and value matrices for every token in their attention mechanism, while SSMs only maintain a fixed-size state. With specialized quantization, Jamba-1.5-Large can be served on a single machine with 8×80GB GPUs at 256K tokens, while a similarly-sized pure Transformer would require far more resources.
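
A back-of-the-envelope formula shows why the key-value cache grows with context and why replacing most attention layers shrinks it. The configuration below is hypothetical and chosen for round numbers; it does not attempt to reproduce the 9GB or 252GB figures quoted above, and it ignores the small fixed-size state that the Mamba layers keep.

```python
def kv_cache_bytes(n_tokens, n_attention_layers, n_kv_heads, head_dim, bytes_per_value=2):
    """Memory needed to cache keys and values for n_tokens (16-bit values by default)."""
    # Factor 2: one key vector and one value vector per token, per layer, per KV head.
    return 2 * n_tokens * n_attention_layers * n_kv_heads * head_dim * bytes_per_value

CONTEXT = 256 * 1024  # 256K tokens

# Hypothetical 32-layer model where every layer uses attention.
pure_transformer = kv_cache_bytes(CONTEXT, n_attention_layers=32, n_kv_heads=8, head_dim=128)

# Same depth, but only 1 layer in 8 uses attention (the 1:7 ratio).
hybrid = kv_cache_bytes(CONTEXT, n_attention_layers=4, n_kv_heads=8, head_dim=128)

print(pure_transformer / 2**30, "GiB")  # 32.0 GiB
print(hybrid / 2**30, "GiB")            # 4.0 GiB
```

Under these assumptions the cache shrinks in direct proportion to the number of attention layers removed, and it still grows linearly with context length in both cases.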

The hybrid design solves a critical weakness of pure SSMs. The Jamba paper reveals that pure Mamba models struggle with in-context learning, achieving only 48.8% accuracy on tasks like sentiment classification versus 84.1% for pure attention. The hybrid architecture recovers this capability, achieving 90.9% on the same task. Even minimal attention layers (1 in 8) are sufficient for emergent in-context learning abilities.

Yet hybrid architectures face significant challenges. The interaction between different computational paradigms creates new failure modes that don't exist in pure architectures. The model's behavior can be less predictable, as different types of queries may activate different architectural components. The relative newness means less community knowledge about optimization, fewer pretrained checkpoints to build upon, and limited understanding of scaling laws.

The emergence of hybrid architectures signals a broader trend in LLM development. By combining complementary strengths, hybrid models aim to overcome fundamental limitations rather than just scaling existing approaches. Yet the major providers have stayed out of this trend. OpenAI, Anthropic, and Google continue to bet on pure transformer architectures, leaving hybrid development to smaller players like AI21 (with Jamba) and IBM (with Granite 4.0). Whether the efficiency gains justify the added complexity remains to be proven at the scale of GPT or Claude.

Part II: The Training Data Divide

The architectural differences between dense and sparse models pale in comparison to a more fundamental divide: what these models actually learn from. Perhaps no aspect of modern LLMs reveals their philosophical differences more starkly than their approach to training data transparency.

Closed-model companies like OpenAI, Anthropic, and Google treat their training data as trade secrets. GPT-4's technical report explicitly refuses to discuss dataset construction [8]. Claude's documentation mentions only a "proprietary mix" of public and licensed data [9]. Google says Gemini trains on "publicly accessible sources" but won't define what that means [10].

Open-weight model developers aren't much better; they just pretend to be. Meta provides substantial details about Llama 3's architecture, stating it trained on 15 trillion tokens from public sources while explicitly excluding Meta user data. DeepSeek's technical report specifies 14.8 trillion "diverse and high-quality tokens" and provides extensive architectural details but, like Meta, never reveals the specific sources. These "open" models release their weights but not their recipes. They tell you how much data they used, sometimes even the general categories, but never the actual sources.

Whether a model uses dense or sparse architecture matters less than the fact that they're all processing variations of the same questionable content. This matters because training data determines capability. A model trained primarily on scientific papers and Wikipedia will excel at different tasks than one trained on Reddit and GitHub. The difference between dense and sparse architectures might affect efficiency, but the difference in training data affects what the model knows and how it thinks. When companies hide their data sources, you're trusting their judgment about what knowledge matters and what biases are acceptable.

Part III: Alignment: Teaching Models How to Behave

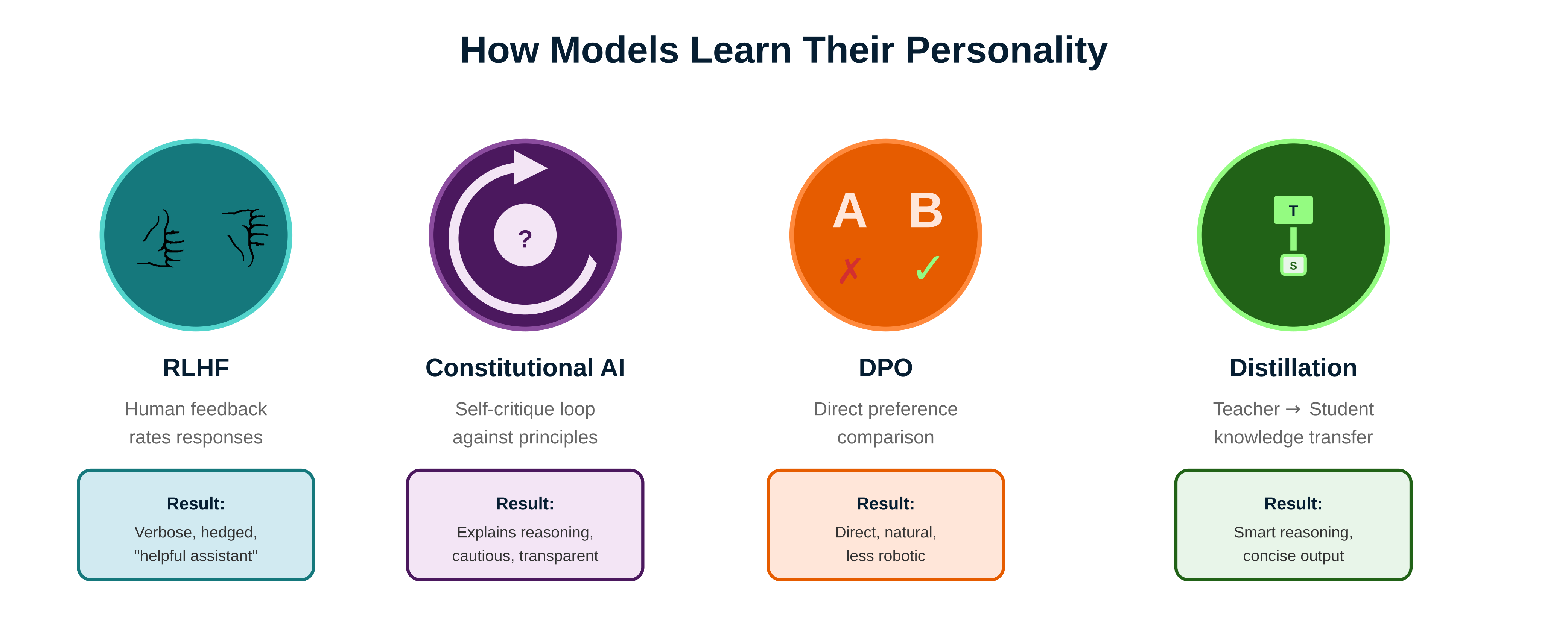

After pre-training, models undergo alignment. This process transforms them from powerful but unwieldy text predictors into helpful assistants. The alignment method fundamentally shapes each model's personality, and unlike architecture or training data, this is where companies actively choose how their models should act.

Dense and sparse models might differ in how they process tokens, but alignment determines what they'll actually say. Models trained on identical data can develop markedly different personalities depending on how they are aligned. This is why GPT-5 feels different from Claude, even though both are believed to be dense transformers that presumably draw on similar web sources.

The standard approach is Reinforcement Learning from Human Feedback (RLHF), used by GPT-5, Gemini, and Llama. Human trainers rate thousands of model outputs, and the model learns to maximize these ratings through reinforcement learning. It works, but it's expensive and creates strange incentives. Models learn to game the scoring system, producing responses that sound helpful rather than being helpful. They become verbose because longer answers often score higher. They hedge constantly because certainty gets penalized. RLHF creates the characteristic "ChatGPT voice" that sounds simultaneously confident and evasive.

Anthropic took a different path with Constitutional AI for Claude [11]. Instead of relying primarily on human feedback, Claude critiques and revises its own outputs based on a written set of principles. The model essentially debates with itself about whether its responses are helpful, harmless, and honest. This self-reflection loop creates Claude's characteristic cautious style.

It's why Claude often explains its reasoning at length and sometimes refuses harmless requests. The model learned to be its own harshest critic, which makes it safer but also more frustrating when you just want a straight answer.
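
The critique-and-revise loop can be sketched as follows. This is an illustrative outline only: the `generate` callable, the prompt wording, and the toy model are all assumptions made for this example and are not Anthropic's actual prompts or code. In the published method [11], the revised responses are then used as fine-tuning data, followed by a reinforcement learning phase based on AI feedback.

```python
def critique_and_revise(generate, prompt, principles, rounds=1):
    """Draft a response, then critique and revise it once per principle per round."""
    response = generate(prompt)
    for _ in range(rounds):
        for principle in principles:
            # Hypothetical prompt templates; the real wording is not specified here.
            critique = generate(
                f"Critique the response below against this principle: {principle}\n\n{response}"
            )
            response = generate(
                "Revise the response to address the critique.\n\n"
                f"Critique: {critique}\n\nResponse: {response}"
            )
    return response

# Toy stand-in for a language model so the sketch runs end to end.
calls = []

def toy_model(text):
    calls.append(text)
    return f"draft-{len(calls)}"

final = critique_and_revise(toy_model, "Explain MoE routing.", ["be helpful", "be honest"])
```

Each principle costs two extra model calls, one critique and one revision, so the loop above makes five calls in total for two principles.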

Direct Preference Optimization (DPO), used by Mistral, Zephyr, and various open-source models, skips the complex reinforcement learning stage entirely [12]. Instead of training a reward model and then using it to guide the language model, DPO directly optimizes the model to prefer certain responses over others. It's simpler, more stable, and produces models that feel more direct and less constrained. There's less of that characteristic "AI assistant" personality because the model hasn't been shaped by thousands of micro-rewards for sounding helpful.
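
The DPO objective from [12] is compact enough to write out for a single preference pair. The sketch below assumes the four inputs are summed log-probabilities of the chosen and rejected responses under the model being trained and under a frozen reference model; the function name and the example numbers are illustrative.

```python
import math

def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """DPO loss for one (chosen, rejected) pair of responses."""
    # How much more the policy likes each response than the reference does.
    chosen_margin = policy_chosen_logp - ref_chosen_logp
    rejected_margin = policy_rejected_logp - ref_rejected_logp
    logits = beta * (chosen_margin - rejected_margin)
    # -log(sigmoid(logits)), written as log(1 + exp(-logits)).
    return math.log1p(math.exp(-logits))

# Policy identical to the reference: the loss is log(2).
neutral = dpo_loss(-2.0, -2.0, -2.0, -2.0)

# Policy has shifted probability toward the chosen response: the loss is lower.
improved = dpo_loss(-1.0, -3.0, -2.0, -2.0)
```

No reward model appears anywhere in the computation; the preference signal is applied to the language model's own log-probabilities, which is the simplification the paragraph describes.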

DeepSeek took yet another approach with distillation from reasoning models. They first trained a separate model (R1) that explicitly shows its reasoning process, writing out every logical step like a student showing their work on a math test. This reasoning model produces extremely verbose outputs, sometimes thousands of tokens of internal deliberation for simple questions. Then they "distilled" this capability into their chat model by training it to predict the reasoning model's final answers without reproducing all the intermediate steps.

The key difference from other alignment methods is that distillation teaches the chat model to internalize complex reasoning patterns without the verbose presentation. Where RLHF models learn to sound thoughtful by adding hedges and explanations, and Constitutional AI models learn to explain their ethical reasoning, distilled models learn the actual reasoning process but express it concisely (Figure 3). The student model can solve the same problems as the teacher but doesn't need to "think out loud" to get there. This produces responses that feel both intelligent and direct, without the performative thoughtfulness that characterizes RLHF or the defensive explanations of Constitutional AI.

The choice of alignment method matters as much as architecture for practical use. Dense models aligned with RLHF tend toward safe, verbose responses that please reviewers but frustrate power users. Sparse models with DPO alignment often feel more natural but might miss nuances that RLHF would catch. Constitutional AI produces models that explain themselves thoroughly, which is great for transparency but tedious for simple tasks. Distillation creates models that have internalized complex reasoning without the need to demonstrate it constantly.

These alignment choices also interact with architecture in unexpected ways. A sparse MoE model might route tokens to different experts during pre-training, but alignment teaches all experts to behave consistently. This can actually reduce the diversity that MoE architectures were designed to capture. Similarly, a model with a massive context window might learn during alignment to ignore most of it, focusing on recent tokens because that's what gets rewarded.

The alignment process reveals a fundamental tension in AI development. Companies generally want models that are helpful, harmless, and honest, but these goals conflict. Different alignment methods strike this balance differently, creating the distinct personalities you experience.

That frustrating refusal? Constitutional AI being overly cautious. The unnecessarily long explanation? RLHF rewarding thoroughness. Clean code without lectures? DPO or distillation keeping things direct.

Part IV: The Context Window Arms Race

Context windows have exploded from thousands to millions of tokens. But not all context windows are created equal, and the underlying architecture determines how well models actually use them.

Dense models show this limitation clearly. Current Claude models maintain 200,000-token context windows, while Gemini 2.5 Pro claims 1 million tokens. These models can theoretically process entire codebases or books in a single prompt. But there's a problem: performance degrades as context grows, an effect variously called "lost in the middle", context decay, or "context rot". The models' ability to recall and synthesize information deteriorates, especially in the middle of long contexts. The attention mechanism has to relate each token to thousands or millions of others, and it becomes increasingly strained as that number grows.

Sparse MoE models face a different challenge. While they're more efficient computationally, their routing mechanisms must decide which experts should handle information from different parts of a long context, and the attention layers still carry the cost of long sequences. DeepSeek uses Multi-Head Latent Attention to compress the memory footprint of the attention cache. Mistral 7B, the dense model whose architecture Mixtral builds on, uses Sliding Window Attention, where each token only attends to a fixed window of previous tokens, making the computation linear rather than quadratic in sequence length. These approaches trade some context awareness for computational feasibility.
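
The sliding-window idea mentioned above amounts to a particular attention mask. The helper below is a minimal sketch with an invented name, `sliding_window_mask`; production implementations avoid materializing the full mask.

```python
def sliding_window_mask(n_tokens, window):
    """mask[i][j] is True when token i may attend to token j.

    Token i sees itself and at most window - 1 tokens immediately before it.
    """
    return [[(j <= i) and (i - j < window) for j in range(n_tokens)]
            for i in range(n_tokens)]

mask = sliding_window_mask(n_tokens=5, window=3)
allowed = sum(sum(row) for row in mask)
# mask[4] == [False, False, True, True, True]: token 4 no longer sees tokens 0 and 1
```

Each row contains at most `window` allowed positions, so the number of comparisons grows linearly with sequence length; the cost is that tokens outside the window are only reachable indirectly through stacked layers.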

The architectural differences matter in practice. Dense models excel at tasks requiring holistic understanding of shorter contexts but struggle to maintain coherence across hundreds of thousands of tokens. Sparse models can handle longer contexts more efficiently but might miss subtle connections that require different experts to coordinate.

Hybrid architectures like Jamba maintain more consistent performance across their full context length by combining the linear scaling of SSMs with strategic use of attention.

For practical use, place your most important instructions at the very end of long prompts. Models tend to weight recent tokens more heavily regardless of architecture. If you're doing multi-turn conversations with massive contexts, periodically summarize key points to "refresh" the model's focus. The architecture determines the maximum context size, but your prompt structure determines whether the model can actually use it effectively.
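
This prompt-ordering advice can be captured in a small helper. The function name, section labels, and layout below are assumptions for illustration; nothing here is tied to a particular provider's API.

```python
def build_long_prompt(documents, instructions, summary=None):
    """Assemble a long prompt with reference material first and instructions last."""
    parts = list(documents)
    if summary:
        # A running summary sits after the bulk material so it stays "recent".
        parts.append("Summary of key points so far:\n" + summary)
    # Instructions go at the very end, where they are least likely to be lost.
    parts.append("Instructions:\n" + instructions)
    return "\n\n".join(parts)

prompt = build_long_prompt(
    documents=["<contents of report A>", "<contents of report B>"],
    instructions="Answer in one sentence.",
    summary="Reports A and B disagree on the launch date.",
)
```

In a multi-turn session, the `summary` argument would be regenerated every few turns so that the key points reappear near the end of the context.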

Part V: Making Sense of the Differences

The architectural differences create real performance patterns. Dense models like GPT-5 and Claude give you consistency. Every response pulls from their full capacity, which is why they excel at nuanced tasks but cost more and run slower. Sparse models like DeepSeek give you efficiency. They're routing your tokens to specialized experts, which means they can often match dense model quality at a fraction of the compute cost.

Context windows tell you maximum capacity, not effective range. All these models perform best in the first and last few thousand tokens of their context. That million-token context from Gemini sounds impressive, but you'll get better results keeping things under 100k. Jamba's hybrid architecture appears to maintain more consistent performance across its full 256k window thanks to its state space components, though it can struggle with tasks requiring precise attention to specific details that pure transformers handle naturally.

The personality differences from alignment are predictable once you know what to look for. Claude will explain its reasoning because Constitutional AI taught it to be transparent. GPT-5 gives you that characteristic helpful-but-hedged response because RLHF rewards sounding authoritative while avoiding controversy. DeepSeek feels more direct because distillation taught it to internalize reasoning without showing every step.

Pick your model based on what you observe, not what companies claim (Table 1). Need rock-solid consistency for critical reasoning? Dense architecture. Want to run something locally or at scale? Sparse MoE. Processing massive documents? Consider Jamba's hybrid approach. The differences are real, grounded in engineering realities, and predictable enough to use.

Table 1: Large Language Models: Per-Model Comparison

| Model | Architecture | Context Window | Alignment Method | Key Trade-offs |
|---|---|---|---|---|
| Claude (Sonnet/Opus) | Dense (all params active) | 200K tokens | Constitutional AI (self-critique loop) → cautious, explanatory | + Consistent, transparent reasoning; - Higher compute cost; - Sometimes overly cautious |
| GPT-5 | Dense (likely) | Up to 400K tokens (API) | RLHF (human feedback) → helpful, verbose | + Strong general performance; - "ChatGPT voice" (hedging); - Expensive at scale |
| Gemini (2.5 Pro / Flash) | Dense | 1M tokens (but degrades) | RLHF (human feedback) → thorough, verbose | + Massive context capacity; - Context rot in middle sections; - Computational overhead |
| DeepSeek-V3 | Sparse (MoE), 671B total, 37B active | 128K tokens | Distillation (from reasoning model) → direct, concise | + 5.5x more efficient than dense; + Internalized reasoning; - Routing can fail on edge cases |
| Mixtral 8x7B | Sparse (MoE), 47B total, 13B active | 32K tokens | DPO (direct preference) → natural, direct | + Runs on consumer GPUs; + Less "AI assistant" personality; - Can miss nuances |
| Jamba 1.5 | Hybrid (Transformer + SSM), 398B total, 94B active | 256K tokens (28x less memory) | Likely DPO (not disclosed) | + Best long context efficiency; + Consistent across full window; - Less mature, fewer resources |

- Training data sources undisclosed (trade secrets)
- Context performance degrades in middle sections
- Alignment method heavily influences personality
- Architecture details often proprietary/unconfirmed

Note: Parameter counts and some architecture details remain unconfirmed for closed models. Context windows shown are maximums; effective use often lower.

References

1. Vaswani et al., "Attention Is All You Need" (2017) - The paper that introduced the Transformer architecture. arxiv.org/abs/1706.03762

2. Brown et al., "Language Models are Few-Shot Learners" (2020) - GPT-3 technical paper detailing architecture, including 96 layers. arxiv.org/abs/2005.14165

3. Meta, "The Llama 3 Herd of Models" (2024) - Technical report detailing Llama 3's architecture and training data. arxiv.org/abs/2407.21783

4. DeepSeek-AI, "DeepSeek-V3 Technical Report" (2024) - Comprehensive technical details of the 671B parameter MoE model with 37B activated per token. arxiv.org/abs/2412.19437

5. Open Source Initiative, "Open Weights: Mixtral and MoE Models" (2024) - Documentation on Mixtral 8x7B architecture and Mixture of Experts routing. https://opensource.org/ai/open-weights

6. Jiang et al., "Mixtral of Experts" (2024) - Technical report on the 8x7B sparse model architecture. arxiv.org/abs/2401.04088

7. AI21 Labs, "Jamba-1.5: Hybrid Transformer-Mamba Models at Scale" (2024) - Details of the SSM-Transformer hybrid architecture. arxiv.org/abs/2408.12570

8. OpenAI, "GPT-4 Technical Report" (2023) - Notable for explicitly declining to share training data details. arxiv.org/abs/2303.08774

9. Anthropic, "Claude 3 Model Card" (2024) - Documentation acknowledging proprietary training data mix. assets.anthropic.com/m/61e7d27f8c8f5919/original/Claude-3-Model-Card.pdf

10. Google, "Gemini Apps Privacy Hub" (2024) - Documentation stating training uses "publicly accessible sources" without further specification. support.google.com/gemini/answer/13594961

11. Bai et al., "Constitutional AI: Harmlessness from AI Feedback" (2022) - Anthropic's alternative to RLHF for Claude. arxiv.org/abs/2212.08073

12. Rafailov et al., "Direct Preference Optimization: Your Language Model is Secretly a Reward Model" (2023) - The DPO method used by many open models. arxiv.org/abs/2305.18290